The last time I wrote a blog post, I was experimenting with the Pandas framework in Python while exploring the use of (open) data. However, the example I was working on at the time had little to do with my work as a structural engineer. So I thought it high time to look for a topic where I could experiment with data analysis within my own field of work. The only question was: which data is suitable to get started with?

(image source: https://www.stitchdata.com/blog/best-practices-for-data-modeling/)

In the past year, through my appointment at TU Delft, I have been able to guide a number of students with their graduation projects. An increasingly popular subject in these theses in recent years is the aspect of sustainability, a subject with many, many facets. Students have done and are doing research on flexibility and adaptivity of buildings and structures, demountability, reuse of components from existing buildings, etc. And, of course, the impact that the use of raw materials has on the environment.

Because the latter was relatively unknown territory for me, I thought it would be good to dive deeper into the subject, and particularly into the area where a structural engineer can make a difference: material use. Moreover, the subject turned out to be very well suited to combining with (BIM) data analysis. That’s how my next project ended up being a proof of concept for automating the CO2 footprint calculation for my projects!

The Base: Python, Pandas, and Revit schedules

For this exercise I chose to use exported Revit schedules for the required building information, and Python with the Pandas framework for processing and editing this data. Of course, it’s also possible to do this in Revit itself, by hooking up to the Revit API, or by using visual programming in Dynamo for example.

Figure: Screenshot of a Floor schedule, as exported from Revit to Excel

However, I believe that the power of schedules is greatly underestimated. "Big BIM", "Common Data Environments" and "Digital Twins" are fashionable concepts, often used to tease data-oriented articles in the industry, but more often than not practice is not that sophisticated, for a variety of reasons. I ask you, which engineering firm always enriches its BIM models with (3D) reinforcement, the governing loads on elements, or the environmental impact of each separate element?

Data exported to Excel however is the complete opposite. A cost consultant can use this as a basis for the calculation of building cost, a sustainability consultant can allocate the volumes of materials for certain BREEAM calculations, and a structural engineer can use the extracted geometry to automate the calculation of the critical steel temperature of steel elements in a project. And all this can be done without having to use specific (pricy!) software or have experience with the software in which the project is modelled.

In my opinion, the above argument is also valid for the use of Python: it is free and can therefore be used by everyone! In addition, it is a very powerful tool in itself, and for a programming language relatively easy to learn. It is not for nothing that the use of Python is increasing significantly at companies as well as at educational institutions like TU Delft...

Back to the environmental impact...

In order to be able to perform a good calculation, the information from the Revit model (geometry, quantities, materialization) needs to be combined with information on the environmental impact of parts and materials. Unfortunately, the database of the Dutch foundation “Stichting Nationale Milieudatabase” turned out not to be open source (missed opportunity!), so I chose to feed the necessary information for this proof of concept from a separate Excel file.

Currently, in my proof of concept, the added parameters are specific weight and CO2 footprint of the (generic!) materials used in Revit, and the global reinforcement quantities for concrete structures, as often determined in the initial phases of the design process. Of course, this information can be further expanded on, for example with intended compositions of concrete mixtures, type of fire-resistant cladding for steel structures, etc.

Figure: Additional tables, to be combined with the Revit schedules

Python and Pandas at work

I won't go into the actual Python code this time. A link to the proof of concept script can be found at the bottom of this blog as usual. This time I want to reflect on the power of Python and Pandas in relation to data processing.

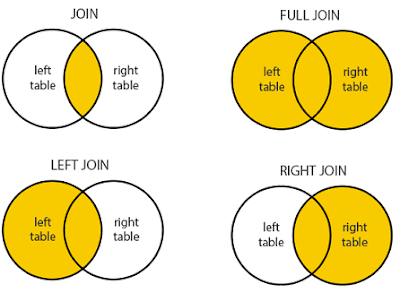

As it turns out, Pandas supports data processing principles that have been applied for decades in database languages and software such as (My/MS) SQL and Microsoft Access. It is, for example, possible to combine tables with each other based on so-called (unique) “IDs” or "keys".

(image source: https://www.dofactory.com/sql/join)
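As a rough sketch of what such a key-based join looks like in Pandas: the column names and values below are made up for illustration and are not taken from the actual script. A left join keeps every row of the schedule and looks up the matching row in a second table via the shared key column.

```python
import pandas as pd

# Hypothetical extract of a Revit floor schedule (illustrative values only)
schedule = pd.DataFrame({
    "Family": ["Floor", "Floor", "Floor"],
    "Structural Material": ["Concrete C30/37", "Concrete C30/37", "Steel S355"],
    "Volume": [12.5, 8.0, 0.4],  # m3
})

# Hypothetical lookup table with exactly one row per material (the "key")
materials = pd.DataFrame({
    "Structural Material": ["Concrete C30/37", "Steel S355"],
    "Density": [2400.0, 7850.0],  # kg/m3
})

# Left join: keep every schedule row and add the properties of its material.
# validate="many_to_one" raises an error if a material occurs more than once
# in the lookup table, which would otherwise silently duplicate schedule rows.
joined = schedule.merge(
    materials,
    on="Structural Material",
    how="left",
    validate="many_to_one",
)

print(joined)
```

With `how="left"`, a material that is missing from the lookup table shows up as an empty (NaN) value rather than dropping the element from the result, which makes gaps in the input data easy to spot.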

In this proof of concept, two different combinations have been made to arrive at the intended output:

- The material data (specific gravity, CO2 footprint) is linked on the basis of one parameter: (Structural) Material;

- The data relating to the concrete structures in the model is linked on the basis of two parameters: Family (element type) and (Structural) Material. For example:

  - a distinction can be made between cast in-situ walls and floors, which usually do not have the same reinforcement or concrete mixture composition, and therefore do not contribute equally to the environmental impact;

  - the same goes for prefabricated concrete, which usually has a larger CO2 footprint due to the higher concrete strength and/or the need for a faster hardening process (in the factory).
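The two combinations described above could look roughly like this in Pandas. This is a sketch under assumptions, not the code from the linked script: all column names, the constant `REBAR_CO2_PER_KG` and every number are hypothetical placeholders rather than real environmental data, and the footprint formula (volume × density × CO2 per kg, plus reinforcement mass × CO2 per kg) is a simplification.

```python
import pandas as pd

# All names and numbers are illustrative placeholders, not real environmental data
schedule = pd.DataFrame({
    "Family": ["Floor", "Wall", "Floor"],
    "Structural Material": [
        "Concrete, cast in-situ", "Concrete, cast in-situ", "Concrete, precast",
    ],
    "Volume": [10.0, 5.0, 4.0],  # m3
})

# Lookup 1: material properties, keyed on one column
materials = pd.DataFrame({
    "Structural Material": ["Concrete, cast in-situ", "Concrete, precast"],
    "Density": [2400.0, 2400.0],  # kg/m3
    "CO2 per kg": [0.10, 0.15],   # kg CO2 per kg material
})

# Lookup 2: global reinforcement quantities, keyed on two columns
reinforcement = pd.DataFrame({
    "Family": ["Floor", "Wall", "Floor"],
    "Structural Material": [
        "Concrete, cast in-situ", "Concrete, cast in-situ", "Concrete, precast",
    ],
    "Rebar per m3": [100.0, 80.0, 120.0],  # kg reinforcement per m3 concrete
})

REBAR_CO2_PER_KG = 1.0  # assumed placeholder value, kg CO2 per kg steel

enriched = (
    schedule
    # single-key join: material properties
    .merge(materials, on="Structural Material", how="left", validate="many_to_one")
    # two-key join: reinforcement depends on element type and material
    .merge(reinforcement, on=["Family", "Structural Material"], how="left",
           validate="many_to_one")
)

# Simplified footprint per element (assumed formula, see lead-in)
enriched["Material CO2"] = (
    enriched["Volume"] * enriched["Density"] * enriched["CO2 per kg"]
)
enriched["Rebar CO2"] = enriched["Volume"] * enriched["Rebar per m3"] * REBAR_CO2_PER_KG
enriched["Total CO2"] = enriched["Material CO2"] + enriched["Rebar CO2"]

print(enriched[["Family", "Structural Material", "Total CO2"]])
```

Passing a list to `on=` is what makes the second join distinguish a cast in-situ floor from a cast in-situ wall, even though both share the same material.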

The concept of this table merge for this proof of concept is sketched in the image below:

Figure: Screenshot of the Floor schedule, stripped and enriched with the CO2 footprint data

This data can then be easily exported to Excel again using Pandas, after which this data can be further used for calculations, or, for example, visualizations of the data using Power BI:

Figure: Data visualisation in Microsoft's Power BI
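A possible way to write the enriched data back to Excel, sketched with made-up data: the file name, sheet names and numbers are hypothetical, and the per-family summary is just one example of a table that is convenient to chart. Writing `.xlsx` files from Pandas requires an Excel engine such as openpyxl to be installed, so the sketch falls back to CSV if none is available.

```python
import tempfile
from pathlib import Path

import pandas as pd

# Hypothetical enriched schedule; the numbers are placeholders, not real results
enriched = pd.DataFrame({
    "Family": ["Floor", "Floor", "Wall"],
    "Structural Material": [
        "Concrete, cast in-situ", "Concrete, precast", "Concrete, cast in-situ",
    ],
    "Total CO2": [3400.0, 1920.0, 1600.0],  # kg CO2
})

# One row per element type, convenient as the source for a chart
summary = enriched.groupby("Family", as_index=False)["Total CO2"].sum()

out_dir = Path(tempfile.mkdtemp())
written = out_dir / "co2_footprint.xlsx"
try:
    # Two sheets in one workbook: the element-level data and the summary
    with pd.ExcelWriter(written) as writer:
        enriched.to_excel(writer, sheet_name="Elements", index=False)
        summary.to_excel(writer, sheet_name="Per family", index=False)
except ImportError:
    # No Excel engine installed: fall back to a plain CSV of the element data
    written = out_dir / "co2_footprint.csv"
    enriched.to_csv(written, index=False)

print(summary)
print(f"Written to {written}")
```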

This shows that, with little effort, you can significantly enrich the data from a BIM model. And the possibilities are extensive: similar workflows can be set up with the same ease for e.g. cost calculations, BREEAM (WST) calculations, etc. And all this without you having to be a (BIM software) data expert to fill and manage the BIM model!

Have a look at the script via the Gist link below. And of course all your comments or questions are welcome!

https://gist.github.com/marcoschuurman/cea6fff469430e94f0ec42ebc3c1fc61